UNIVERSITY OF HOUSTON CLEAR LAKE

SCHOOL OF EDUCATION

ASSESSING THE IMPACT OF THE NASA JOHNSON SPACE CENTER

DISTANCE EDUCATION AND MENTORING PROGRAM, TEXAS AEROSPACE SCHOLARS

(YEAR ONE, 2000 - 2001)

By RITA KORIN KARL

A Project Proposal submitted to the

School of Education

in partial fulfillment of the

requirements for a degree

of Master of Science

Approved:

________________________________

James Sherrill, Ph.D.

Associate Dean

________________________________

Atsusi Hirumi, Ph.D.

Associate Professor, Project Supervisor

________________________________

Glenn Freedman, Ph.D.

Education Faculty, Committee Member

September, 2001

Abstract

To meet the growing demand for a high-tech workforce, the Texas Aerospace Scholars (TAS) program aims to encourage 11th-grade students to consider careers in math, science, engineering or technology. This study evaluates the initial impact of TAS on students’ future career choices. Extant data, collected by the National Aeronautics and Space Administration (NASA) Johnson Space Center (JSC) during the first year of the program, will be analyzed to evaluate two primary program features: (a) the on-line distance education science and engineering curriculum, and (b) the mentoring relationships between students and NASA engineers and scientists. The students’ attitudes toward the program and toward engineering as a career, as well as their choice of colleges and intended majors, will be assessed. The results of the summative evaluation will be used as a basis for making decisions about program continuation.

Table of Contents

List of Figures and Tables

Chapter 1. Introduction

Introduction

Project Background

Problem Statement

Significance

Chapter 2. Review of Literature

Science and Engineering Education Programs

Mentoring Programs

Mentoring Gifted High School, Female and Minority Students

Implications for Program Evaluation and Continuous Improvement

Distance Education

Distance Education for Gifted Students

Web-based Education Programs

Web-based Science Education Programs

Web-based Mentoring Programs

Implications for Program Evaluation and Continuous Improvement

Program Evaluation

What is Program Evaluation?

Forms, Approaches and Types of Educational Evaluation

Program Evaluation Procedures

Validity and Reliability of Results

Implications for Program Evaluation and Continuous Improvement

Chapter 3. Method

Population and Sample

Research Design

Instruments

Data Collection Procedure

Data Analysis

Limitations

References

Appendix

A - National Science Foundation Science and Engineering Indicators

B - National Merit Scholars Planned Majors

C - Joint Committee Standards on Program Evaluation

D - Student Application, Commitment Form, Talent Release Form

E - TAS Databases

F - Data Analysis Outline

G - TAS Evaluation Instruments

List of Figures and Tables

Figure 1. Program components.

Figure 2. Texas Aerospace Scholars' assumptions and target impacts.

Figure 3. NASA Project development cycle.

Figure 4. Texas Aerospace Scholars student profile.

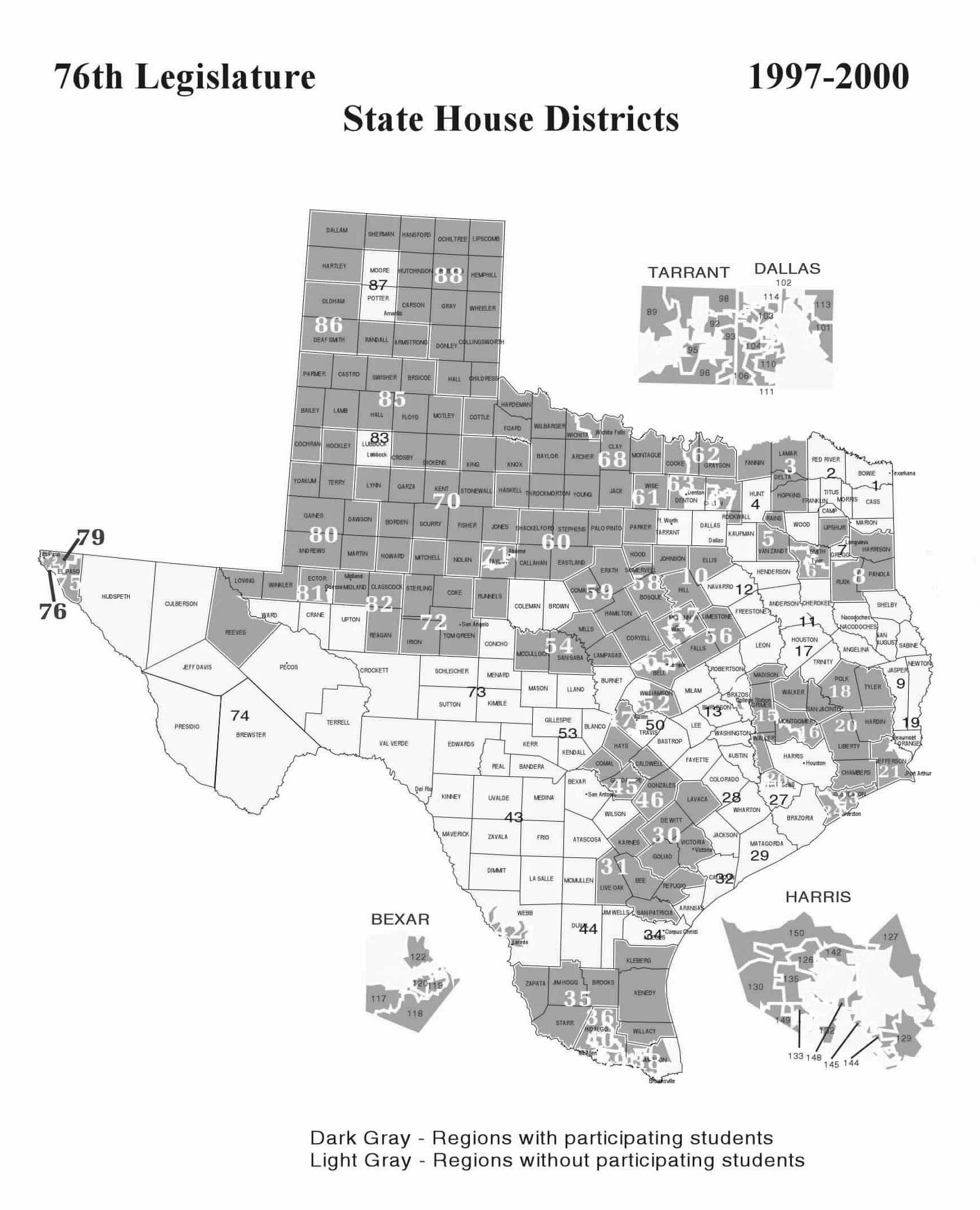

Figure 5. Texas Aerospace Scholars district representation - House.

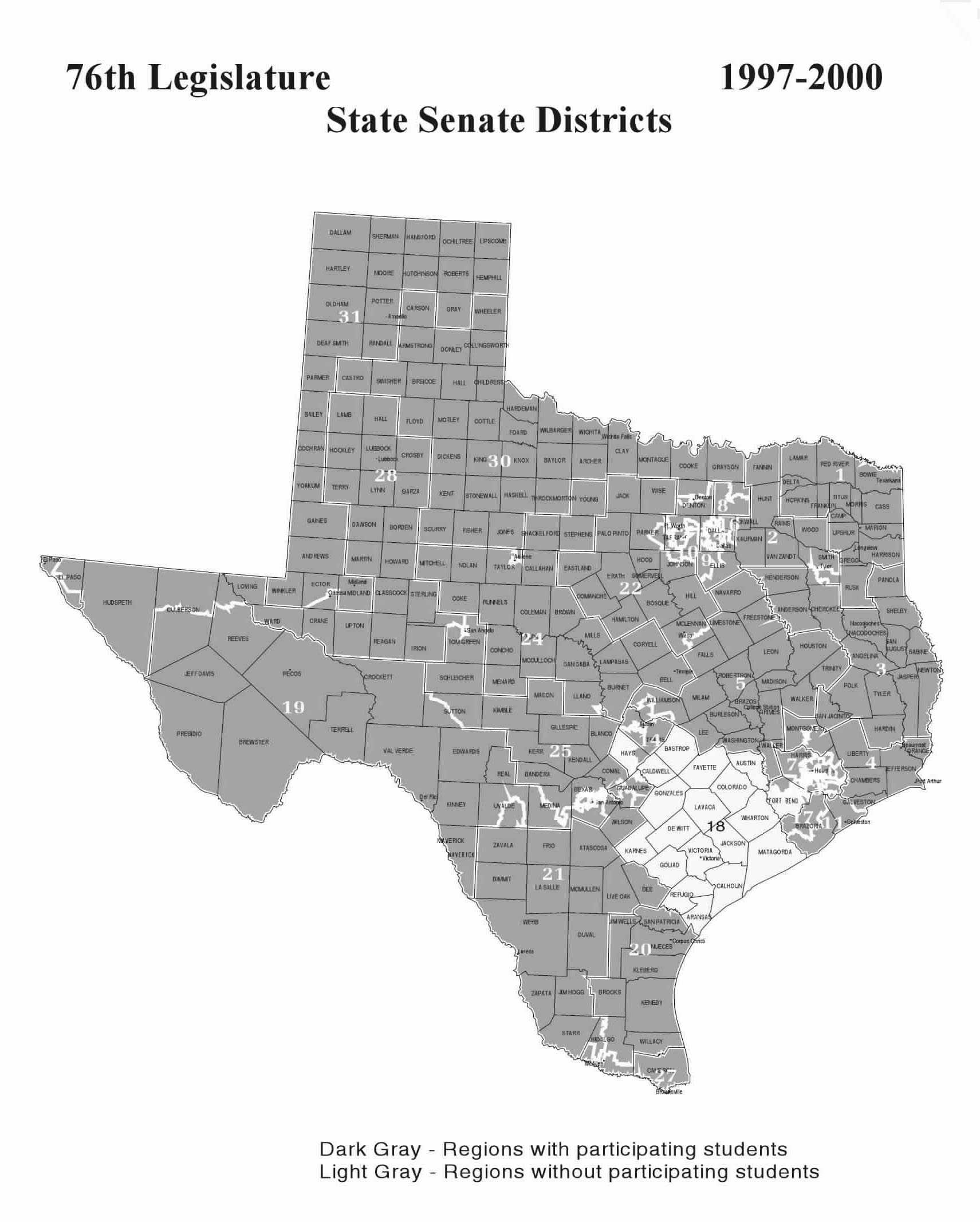

Figure 6. Texas Aerospace Scholars district representation - Senate.

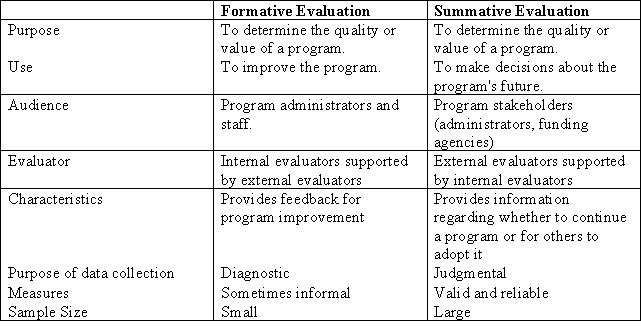

Table 1. Differences between formative and summative evaluation.

Table 2. TAS timeline and data collection points.

Chapter 1

Introduction

According to the National Science Foundation (1998), the number of available jobs in science and engineering is growing faster than the number of college students pursuing degrees in these fields. Current national statistics show that 1 in 10 positions in the high-tech industry is vacant. From 1998 to 2008, the number of positions for computer scientists is projected to increase by 118%, for computer engineers by 108%, and for engineering, mathematics and natural science managers by 44% (National Science Foundation, 1998; see Appendix A).

To meet the growing demand for a high-tech workforce, Texas Aerospace Scholars (TAS), a distance education and mentoring program, aims to encourage 11th-grade students to consider careers in math, science, engineering and technology. Funded by the State of Texas, NASA’s Johnson Space Center and two private organizations (Rotary NASA and the Houston Livestock Show and Rodeo), TAS is designed to harness the excitement of the space program and encourage gifted students to choose science, engineering or technology as a career. Although there are other space science education programs for gifted scholars, TAS is the first NASA Johnson Space Center (JSC) program to involve distance education together with face-to-face and on-line mentoring. NASA has accumulated a large amount of numerical and anecdotal data regarding the program, but little has been done to synthesize and analyze it. A comprehensive view that incorporates all of the data submitted by the students, staff, mentors and educators involved in the program would help provide an accurate portrayal of the program’s first year.

JSC is committed to a four-year investigation of the TAS program. This study evaluates the initial impact of TAS on students’ future career choices by analyzing qualitative and quantitative extant data collected during the first year. The study focuses on the program’s two key features: (a) the on-line distance education science curriculum, and (b) the mentoring relationships between NASA engineers and 11th-grade students. Student evaluations, grades, testimonials and surveys will be used to assess students’ attitudes toward the program, engineering as a career, the on-line Internet activities, and the on-site mentoring workshop. Students’ choice of colleges and intended majors will also be measured. The results will be used as a basis for making decisions regarding program improvement and continuation. Additional studies at the two-, three- and four-year marks are planned to give a full and accurate picture of the program’s impact.

Project Background

An on-line distance education and mentoring program was chosen to provide access to the greatest number of students. The summer workshop was included to allow students direct contact with their mentors. Every Texas legislator may nominate one or two outstanding high school juniors, from a pool of students recommended by their respective high schools, to participate in TAS. Scholars are nominated based on their academic standing and their interest in science, math, engineering and technology.

Utilizing exciting, hands-on interaction with the space program, TAS aims to inspire students to consider engineering careers. Almost every aspect of the program represents an innovation in United States secondary education.

The TAS curriculum encourages higher-order thinking skills, problem solving, creative design, teamwork, and mentoring with NASA engineers. Scholars are required to be U.S. citizens, to be at least 16 years of age, and to have access to the Internet and e-mail. Following the on-line curriculum, scholars attend a one-week summer workshop in June or July at the NASA Johnson Space Center in Houston, Texas.

The summer workshop builds on the knowledge and experience gained from the distance education curriculum by placing scholars in teams with NASA engineering mentors who serve as positive role models. The students work cooperatively in teams alongside their mentors to design a human mission to Mars. A variety of briefings and field trips with astronauts, mission controllers, scientists and engineers fill out the week. At the end of the week, students present their mission to an audience of NASA administrators, parents and Texas legislators. After the summer workshop, all mentors are encouraged to maintain contact with students to provide continued support and advice through a one-on-one relationship.

All scholars complete a NASA Commitment Form, in which they commit to a four-year follow-up research study, and a Talent Release form that allows NASA to use scholar video and audio interviews. Students complete one test after each of six assignments, as well as a program evaluation and post-program surveys. The student tests, interviews, evaluations, surveys and unsolicited scholar testimonials will guide the summative evaluation of the program. While the full impact of the program can only be assessed through a longitudinal study of scholars over time, initial milestones can be assessed. The final outcome measure of the success of the TAS program will be whether participants remain in science and engineering throughout their college years and actually enter the workforce in these fields. Prior to this final outcome, milestones can be set for each year: at the end of year one, a choice of college and intended major; in years two and three, continued enrollment in a science or engineering major; and in year four, a choice of occupation or graduate school.

Figure 1 outlines the six major program components and the element of time.

Figure 1. TAS Program components.

Statement of the Problem

In 1992, approximately 40 percent of all National Merit Scholars were interested in majoring in either the natural sciences or engineering (National Science Foundation, 1992; see Appendix B). Despite high levels of freshman intentions to pursue a science or engineering major, the percentage of students majoring in natural science, mathematics, and engineering fields declines from 27 to 17 percent between the freshman and senior years (National Science Foundation, 1993; see Appendix A). The Commission for the Advancement of Women and Minorities in Science, Engineering, and Technology Development (CAWMSET) notes that the shortage of skilled workers in high-tech jobs may lead to an economic crisis unless more under-represented individuals pursue education and careers in science, engineering and technology (CAWMSET, 2000). To address these concerns, JSC considered the type of students likely to enter these fields and concluded that outstanding scholars with an interest in science, mathematics, engineering and technology were most likely to major in them.

Certain assumptions were made regarding how to identify, target, and cultivate these students, and an early intervention program for high school scholars in their junior year was developed. Several further assumptions shaped the delivery of the program. To attract teenagers, a science and engineering curriculum would need to be challenging, highly interactive, and result in a sense of accomplishment. In addition, creating an environment that would support these gifted scholars was deemed paramount to the success of the program. Mentoring by scientists and engineers working at NASA was considered the best way to maintain students’ interest in and commitment to a course of study (and a career) in these fields over time. Integral to the development of the curriculum was an assessment of the learners themselves and their learning styles. Gifted students were assumed to have a high degree of proficiency with technology and the Internet, so a Web-based distance education program was devised based on adult learning principles.

NASA’s Educational Policy states, "We involve the educational community in our endeavors to inspire America's students, create learning opportunities, and enlighten inquisitive minds" (NASA, 2001). The NASA Education Program Mission Statement reads, "NASA uses its unique resources to support educational excellence for all" (NASA, 2001, para. 4). Of the TAS scholars, who come from across the state of Texas, 42% are female and 26% are minorities. Although other science and engineering programs have been developed for outstanding scholars, as have mentoring programs intended to influence scholars’ future career choices, TAS is unique in that it integrates both distance education and mentoring, on-line and in real time, by peers and professionals.

The specific problem addressed by this study is that NASA has gathered considerable amounts of data about TAS, but little to no effort has been made to organize, analyze and report the data. The purpose of this study is to organize and examine data already collected by NASA during the first year of the program. Specifically, the study will evaluate scholars’ attitudes, achievement, choice of colleges and intended majors. The study will also examine important decisions made by the instructional technology designer and NASA education staff about the program (e.g., Was it beneficial to choose the Internet over other methods of education delivery? To include mentoring as an integral component of the program? To address the needs of gifted scholars over other candidates? To use on-line evaluation, video interviews and on-line post-program surveys?). Important impact questions will also be addressed, such as: Does TAS help gifted scholars make informed college and career choices? Does it encourage scholars to consider the math, science, engineering and technology fields with greater certainty? Does it help to foster their decisions regarding these choices by providing role models and visible options? Is distance education the best way of reaching these students? Figure 2 illustrates the assumptions made by NASA and the instructional designer and the measurable impacts over time.

Figure 2. Texas Aerospace Scholars Assumptions and Target Impacts.

Significance

This study will benefit three particular groups: (a) key stakeholders (entities with a vested interest in increasing the number of engineers in the workforce); (b) instructional designers (who are looking for Web-based models that utilize mentoring); and (c) other educational organizations (who are looking for model Web and mentoring programs).

Key stakeholders, including the State of Texas, NASA, the Houston Livestock Show and Rodeo and Rotary NASA, want data regarding the program's impact on students' attitudes toward careers in science, engineering, and technology to guide continued funding decisions. In addition, several other states and at least one other country are looking at the TAS program as a model. The results of this preliminary evaluation may influence other sites and industries considering replicating the program to meet the future need for large numbers of scientists and engineers entering the workforce.

Instructional designers interested in distance education, on-line science education and mentoring programs can use the program evaluation when considering which aspects of programs to replicate. The program and evaluation can serve as a blueprint for other curriculum designers and educators should they encounter a similar need for an interactive Web-based educational and mentoring program.

Although Web-based distance education is still in its infancy, many program models such as cyberschools, virtual schools and educational Web sites have blossomed in the past few years. Most of them are aimed at high school students; some are aimed at gifted scholars, and a few utilize mentoring. TAS attempts to incorporate effective instructional design models and utilize cutting-edge technology and mentoring techniques. This project will bring together all of the extant data accumulated during the first year of the program, organize and analyze it, and present findings to inform decisions about the program's improvement and continuation.

Chapter one provides a brief introduction to TAS: its purpose, basic design and significance. Chapter two reviews literature related to each of the major variables under study: science and engineering education, mentoring gifted scholars, using distance education with high school students, and program evaluation. Chapter three outlines the method to be employed in the program evaluation.

Chapter 2

Review of Related Literature

This chapter reviews literature related to the major variables under study and is divided into three major sections. Section one reviews literature related to science education and mentoring programs for high school students. Section two reviews literature on distance education for high school students, focusing on Web-based programs, and section three reviews literature on program evaluation approaches and procedures. Implications of the findings are discussed at the end of each major section.

Space Science and Engineering Education

A variety of engineering programs exist for high school students; several target gifted scholars and several involve mentoring. Some are targeted to women and minorities, and a few involve computer technology or distance education. This section gives an overview of high school science and engineering programs and of similar programs that utilize mentoring. It concludes with a discussion of the benefits of mentoring gifted students, including minorities and young women.

A variety of science and engineering intervention programs exist for high school students across the country. In 1976, the Science, Engineering, Communications, Mathematics Enrichment Program (SECME) began a summer teacher training institute at the University of Virginia. Teachers who attend the SECME summer institute bring hands-on science and engineering back to their predominantly minority classrooms, where SECME students work collaboratively on projects side-by-side with their teachers. Mousetrap car competitions, bottle rockets, and egg-drop engineering designs are used to teach students physics, calculus and engineering principles.

Seven southeastern university deans formed SECME in 1975 out of concern about the dearth of minorities in high-technology fields, which they believed were key to the nation’s economic health (Hamilton, 1997). University engineering and education faculty designed the courses along with alumni teachers. Teachers brought teams of students to the institute for hands-on mousetrap car competitions and took home curricula for integrating computer technology and engineering into the classroom.

SECME has involved 37 participating universities and 65 industry and government partners (including NASA and Lockheed Martin). Currently, 84 school systems, 583 schools and nearly 30,000 students from 15 states participate in SECME. Since 1980, nearly 50,000 SECME seniors have graduated from high school, 75% of them planning to attend a four-year college. SECME students' average SAT scores are 147 points higher than the U.S. African American average and 97 points higher than the U.S. Hispanic average. Half of the students planned to major in science, math, engineering and technology fields (Hamilton, 1997).

In 1996, a comprehensive engineering program began at Madison West High School near the University of Wisconsin. The course, Principles of Engineering, is offered to high school sophomores, juniors and seniors and was developed with a grant from the National Science Foundation. It explores the relationship between math, science and technology, and students keep daily logs as engineers and scientists do. The course introduces students to important engineering concepts and has them work on real-world case studies that resemble engineering problems. Students are given a problem to solve and choose their own methods.

To aid in the development of their solutions, students are exposed to brainstorming, thumbnail sketching, and various problem-solving techniques. After developing solutions on paper, students choose one solution to prototype. Engineering systems and design principles are addressed, including functionality, quality, safety, ergonomics, environmental considerations, and appearance. The program was developed to encourage students to consider careers in engineering in response to the significant labor shortage faced by the U.S. in technology professions (Gomez, 2000).

The Uninitiates' Introduction to Engineering (UNITE), sponsored by the U.S. Army since 1980, is a summer program for minority students who want to pursue their interest in engineering and technology and build their math and science knowledge and skills. It is offered on five university campuses across the country, in Michigan, New York, Florida, New Mexico and Delaware. It is an intensive program that encourages and assists high school students in preparing for entrance into engineering schools. Each year UNITE coordinators identify talented high school students with an aptitude for math, science, and engineering and give them the opportunity to participate in college-structured summer courses. The courses combine hands-on applications, lectures, laboratories, and problem solving, as well as tours of private and governmental engineering facilities. The students are introduced to ways in which math and science are applied to real-world situations and relate to careers in engineering and technology (UNITE, 2001).

The Mathematics, Engineering and Science Achievement (MESA) program was designed as a curriculum-based program catering to the specific academic or career interests of students. Created in 1970 in Oakland, California, MESA currently operates in eight states. MESA reports that 80% of its graduates go to college the fall after they graduate (compared to 57% of students in those states). It also reports that 80% of under-represented students who receive bachelor’s degrees in engineering at the 23 institutions where MESA is situated are MESA students. MESA targets African American, Hispanic, American Indian, and female students (Rodriguez, 1997).

MESA provides opportunities in mathematics, engineering and science for students in grades 7–12, helping them to prepare for college-level studies in science and technology fields. MESA center programs include SAT workshops, field trips, speakers, competitions, classroom enrichment activities, college preparation, weekend academies, summer camps, satellite teleconferencing, teacher curricula, in-service and after-school enrichment programs, parent programs, teacher training, and student visits to college campuses. The University of California, Berkeley's College of Engineering and Johns Hopkins University's Applied Physics Laboratory are two of MESA's high-profile facilitators.

At the Johns Hopkins MESA site, components include academic tutorials, counseling, field trips, incentive awards, communication skills, science fair and engineering projects, math competitions, and computer use. Students are counseled to enroll in the high school courses required for college entry into science and engineering majors. Engineers, scientists, mathematicians, and college students provide academic tutorials in math, science, English, and communication. Visits to colleges and industry allow students to interact with professionals and observe the scientific community. Students engage in various scientific and engineering projects throughout the academic year. Incentive awards are given periodically to students who meet program goals and maintain a "B" average or better (Johns Hopkins University, 2001).

Characteristics of all four of these programs include hands-on activities and projects, interaction with professionals in the field, pre-college tutorials, site visits, and real-world problem solving. Technology tools and design competitions focus students on higher-order thinking, teamwork, and technology skills by having them apply these skills creatively to real problems. Key factors of the science programs reviewed in this section will be used to guide the design of the program evaluation and future program improvement.

Mentoring Programs

A variety of science and engineering programs include mentoring for high school students. As in many of the MESA programs (e.g., Johns Hopkins), the perceived importance of utilizing science and engineering professionals as tutors and role models has resulted in a variety of programs that utilize mentoring specifically to encourage students to consider scientific and technological career paths. This section gives an overview of seven science and engineering programs for high school students that utilize mentoring.

Located in Los Angeles, California State University-Dominguez Hills teamed up with industry and 11 school districts to form the California Academy of Mathematics and Science, a public high school designed to attract students interested in math, science and engineering. Of the students, 52% are female; 29% are Asian, 28% Hispanic, 28% African American, and 15% white. The school selects from students in the top 35% who indicate a strong interest and potential in math and science. Industry contributions account for about 16% of the school’s budget.

Industry also provides an extensive mentoring program in which about 150 scientists and engineers are paired one-to-one with students. The mentors meet with students once a month and stay in contact with them throughout their high school experience. Industry partners also offer internships that give students hands-on experience in science and engineering careers; approximately 90% of the students work as interns in their junior year. The National Science Foundation and the Sloan Foundation support the internship and mentoring programs. The university also provides professors as mentors, teachers, and curriculum developers. So far, the school reports that it has graduated two classes (230 students), with 99% going on to four-year colleges and 75% majoring in science and engineering (Panitz, 1996).

PRIME is the Pre-college Initiative for Minorities in Education, a Tennessee Technological University program designed to encourage African American college-bound high school graduates to pursue engineering as a career. The program involves academic courses in mathematics and engineering, seminars, tours and tutorials. Its key component is the use of undergraduate engineering students as mentors and role models for these young students. The impact of the mentors on their younger peers plays a significant role in the success of PRIME (Marable, 1999).

To enhance the participation of minority students in engineering, Tennessee Technological University (TTU) established the Minority Engineering Program in 1986. Mentoring is a key component of success for minority students navigating engineering programs at predominantly white institutions. To attract talented minority students to TTU, a summer "bridge" program was developed. The six-week summer program aims to address issues identified as affecting minority students, including academic preparation, inadequate peer support, lack of role modeling and mentoring, and racism, among others (Marable, 1999).

The program aims to reduce the stress of the transition from high school to college by building confidence and self-esteem, providing minority students with mentors, and developing academic skills. Twenty students study mathematics and engineering, take field trips, and complete seminars on computer use, test taking and study skills. Undergraduate mentors work with participants daily, and the relationships continue throughout the first year of college. The American Council on Education states that peer counseling is the most effective way to mentor minority students (Marable, 1999). Other studies argue that peer support is key to retention, helping students cope with the stress often associated with a major like engineering (Marable, 1999).

TTU undergraduate mentors, who share a similar social, ethnic and cultural background with the participants, pass on their knowledge and experience of engineering. During the first two years of the PRIME program, all of the participants remained in engineering. The students reported that their knowledge of engineering was enhanced and that they were better prepared for college coursework. Students described their mentors as "a great help," adding that "they encouraged us to strive toward getting the degree because it will result in a good-paying job." Other comments included "we are still really close," "they would say work hard, it pays off in the long run," and "they were role models for us…they taught me to be professional" (Marable, 1999, para. 23-25). The student-mentoring component is believed to have provided the social and emotional support students need to persist in majors like engineering. "The ultimate success of PRIME rests on the number of participants who become successful engineers" (Marable, 1999, para. 26).

With a grant from the National Science Foundation, the New Mexico Comprehensive Regional Center for Minorities established an outreach program for students with disabilities, including summer computer institutes for middle and high school students. The group sponsors numerous programs, including summer camps that introduce high school seniors to college life and stipends for college students with disabilities to serve as mentors to pre-college students with disabilities. The mentoring program is believed to be critical to the success of the program (Coppula, 1997). One mentor agreed, noting that discrimination and academic difficulties discourage many high school students with disabilities from pursuing engineering: "We get them tutoring and answer their questions…we make them feel good about themselves, show them someone cares. It gives me a great feeling of accomplishment" (Coppula, 1997, para. 8).

The Junior Engineering Mentoring (JEM) Program at Lacey High School in New Jersey was launched in 1996. Local engineers from the Oyster Creek Nuclear Generating Station mentor high school students and provide firsthand experience with real-world situations. JEM provides teachers with curriculum materials to strengthen their subject areas and to show how careers integrate math, science and technology. Students in JEM compete in the annual National Engineering Design Challenge, which brings together students from more than 2,000 high schools around the country. Over 75% of students in the JEM program go on to engineering schools (Molkenthin, 2001).

The National Engineering Design Challenge (NEDC) is a cooperative program with the National Society of Professional Engineers and the National Talent Network. NEDC challenges teams of high school students, working with an engineering adviser, to design, fabricate, and demonstrate a working model of a new product that addresses a social need. NEDC is one of several programs sponsored by JETS, the Junior Engineering Technical Society at the University of Missouri College of Engineering. The JETS mission is to guide high school students toward their college and career goals. JETS provides activities, events, competitions, programs, and materials to educate students about the engineering world. It is a partnership among high schools, engineering colleges, corporations and engineering societies across the country (JETS, 2001).

JETS sponsors the Tests of Engineering Aptitude, Mathematics, and Science (TEAMS) competition enabling high school students to learn team development and problem-solving skills using classroom mathematics to solve real-world problems. JETS also sponsors the National Engineering Aptitude Search+ (NEAS+), a self-administered academic survey that enables individual students to determine their current level of preparation in engineering basic skills subjects like applied mathematics, science, and reasoning, and encourages tutoring and mentoring (JETS, 2001).

Common characteristics of science and engineering programs that utilize mentoring include tutoring, real-world problem solving, teamwork, activities, events, competitions and materials for educators. These programs often match students with mentors of the same ethnicity, gender or disability. The mentoring experience is often long-term, continuing through students' college years. Other characteristics of some programs include peer mentoring, summer camps, pre-college instruction, and the use of technology. These characteristics will be considered during the evaluation of the Texas Aerospace Scholars program.

Mentoring Gifted High School, Female and Minority Students

The term mentor is believed to have originated with Homer’s Odyssey. Before embarking on his journey, Ulysses entrusted the care and education of his son to his wise friend Mentor. Since then, a mentor has signified a well-respected teacher who can provide intellectual and emotional counseling to a younger individual (Casey & Shore, 2000). In today’s world, a mentor (usually an adult) acts as a guide, role model and teacher to a younger individual in a field of mutual interest. This section gives an overview of the literature on the benefits of mentoring gifted scholars, female students and minority students.

Biographies of and interviews with scientists have shown that mentoring is one of the most important influences on gifted scholars’ vocational and emotional success. One quarter of the 56 space scientists surveyed by Scobee and Nash (1983) reported that mentors played a critical role in the evolution of their interest, and mentoring was one of the three experiences they most highly recommended for students.

Research on mentoring gifted adolescents has shown that gifted students value a mentor’s social and vocational modeling just as much as the intellectual stimulation the mentor provides (Casey & Shore, 2000). Gifted scholars have a thirst for knowledge and move at an accelerated pace; a mentor who can challenge them to explore their interests provides the excitement and motivation that is often missing in the classroom.

Because of their advanced cognitive abilities, gifted students have an easier time relating to adults. They need role models who can relate to their experiences and give them guidance and advice on handling their intense work ethic, their intellectual needs and their drive to make the world a better place. Hands-on activities, work experiences and role modeling are the experiences most often cited as having helped scientists and engineers choose and stick with their careers (Scobee & Nash, 1983).

Research has shown that these students not only have the ability to work well with adults but also the capacity to learn from them. Gifted scholars may have non-traditional approaches to learning and interact more successfully with adults because of their advanced cognitive abilities. Gifted scholars have a higher degree of self-motivation and the ability to work independently. Working with mentors may fulfill a gifted student’s desire to become immersed in an area of interest, interact at an adult level, and develop specific talents (Casey & Shore, 2000).

Research has shown that there is a need for good vocational counseling for the gifted for a variety of reasons. Mentors can help gifted adolescents consider their future career choices. Gifted scholars seek out information regarding why people work, the lifestyles related to high-level occupations and moral concerns (such as risk-taking and delayed families) associated with certain careers. They must consider the impact of such high aspirations and the educational investment they must make in choosing a higher-level career (Casey & Shore, 2000).

High-level occupations (such as science, engineering and technology) make heavy intellectual and lifestyle demands and require creativity and the ability to deal with problems that do not have known solutions. Mentoring can enhance gifted students’ experiences with intellectual risk-taking and self-directed learning. Mentoring programs can also give students the opportunity to develop high-level thinking skills, problem-solving techniques and inquiry-based learning (Casey & Shore, 2000).

Research has shown that gifted scholars often feel isolated in communities where being smart is not necessarily equated with being popular. Gifted scholars seem to require emotional support as well as career advice (Bennett, 1997). Mentors can share examples of how they have learned to deal with these feelings in their own lives. Mentors can also help students evaluate their talents realistically, since gifted adolescents often feel they have to hide their talents in order to gain peer acceptance (Casey & Shore, 2000).

When the mentoring experience is well structured and the mentor is well suited to the student, the relationship can provide the gifted student with encouragement, inspiration, and insight. One of the most valuable experiences a gifted student can have is exposure to a mentor who is willing to share personal values, a particular interest, time, talents, and skills (Berger, 1990). Mentoring allows students to learn new skills and explore potential career options. The relationship is a dynamic one that depends on interaction, with the mentor passing on values, attitudes, passions, and traditions to the gifted student (Berger, 1990).

Gifted students generally have a variety of potentials, as they enjoy and are good at many things (Berger, 1990). This wide range of interests, abilities and choices is called ‘multi-potentiality’ (Kerr, 1990). Because gifted scholars often have multiple interests and potentials, they may require substantial information about career options. In high school, gifted scholars have been observed to have decision-making problems that result in heavy course loads and participation in a wide variety of school activities. These students are also often leaders in school, community, or church groups.

Parents may notice signs of stress or confusion regarding college planning while students are maintaining high grades. Kerr (1990) notes a variety of interventions for these high school students including: vocational testing, visits to colleges, volunteer work, internships and work experiences with professionals in an area of interest, establishing a relationship with a mentor in the area of interest, and exposure to a variety of career models.

Mentor relationships with professionals seem to be highly suitable for gifted adolescents, particularly those who have mastered the basics of high school academics. Many of these students excel in school because they can memorize subject material rather than truly understand it, and they may not know how to study at all. These students need to learn how to set priorities and establish long-term goals, something mentors can help them learn (Kerr, 1985).

Gifted scholars have more career options and future alternatives than they can realistically consider. Parents often notice that mentors have a maturing effect on these students: mentors help them develop a vision of what they can become, find a sense of direction, and focus their efforts (Berger, 1990).

School personnel and parents may overlook a gifted scholar’s need for vocational counseling, assuming the student will simply succeed on his or her own (Kerr, 1981). Parents often assume that career choices for gifted students will take care of themselves, that students will choose a career in college, and that there is no pressing need for career planning. However, studies of National Merit Scholars, Presidential Scholars and graduates of gifted education programs have shown that gifted students may have socio-emotional problems and that their needs may differ from those of other students (Berger, 1990).

Mentoring benefits gifted students by helping them cope with multi-potentiality, meeting their need for adult role models, supporting their desire to explore a variety of career options, aiding them in setting goals and priorities, and providing emotional support for feelings of isolation. Characteristics of gifted scholars that can aid the development of a successful mentoring relationship include self-motivation, a desire to interact with adults for intellectual stimulation, and interest in a variety of topics and careers.

Research indicates that special intervention programs for girls in math and science can make a difference. Six months after attending a one-day career conference, girls’ math and science career interests and course-taking plans were higher than before the conference (American Association of University Women, 1992). A three-year follow-up of an annual four-week summer program on math, science and sports for groups of average minority junior high girls found that the girls’ math and science course-taking plans had increased by an average of 40% and that they were actually taking the courses (American Association of University Women, 1992).

A two-and-a-half-year follow-up of a two-week residential science institute for minority and white high school junior girls (already interested in science) found that the program decreased participants' stereotypes about scientific professions and helped to reduce their feelings of isolation. The program also helped to solidify participants' decisions to choose a career in math or science (American Association of University Women, 1992).

Research and case studies focusing on mentors and mentoring often cite the effects of the mentor on career choice and career advancement, especially for young women and minority students (Kerr, 1983). Kaufmann's (1981) study of Presidential Scholars from 1964 to 1968 focused primarily on the nature, role, and influence of the students’ most significant mentors. The most frequently mentioned benefits of having a mentor were having a role model, support, and encouragement. The students noted that their mentors set an example for them, offered them intellectual stimulation and communicated an excitement about learning. Kaufmann's research highlighted the importance of mentors for gifted young women: the study, conducted 15 years after the students graduated from high school, found that the women whose salaries were equal to those of the men had had at least one mentor.

Students’ self-confidence and aspirations grow through mentoring relationships, especially for students from disadvantaged populations (McIntosh & Greenlaw, 1990). Mentor programs throughout the United States match bright, disadvantaged youngsters with professionals. Students learn about the professional’s lifestyle and profession as well as the education that precedes the job. These relationships often extend beyond the boundaries of school, as mentors often become extended family members and even colleagues (McIntosh & Greenlaw, 1990).

Introduced in April 2001, a new U.S. House bill called the ‘Go Girl Bill’ aims to encourage girls in grades 4-12 to pursue studies and careers in science, mathematics, engineering, and technology. Services provided under the bill would include tutoring, online and in-person mentoring, and underwriting costs for internship opportunities. Specifically, the bill aims to encourage girls to major in, and plan for careers in, science, mathematics, engineering, and technology at an institution of higher education. The bill would provide academic advice and assistance in high school course selection, educate parents about the difficulties girls face in maintaining an interest in, and desire to achieve in, science, mathematics, engineering, and technology, and enlist the help of parents in overcoming these difficulties.

The bill would also provide after-school activities and summer programs designed to encourage interest, and develop skills, in science, mathematics, engineering, and technology. It would also support visits to institutions of higher education to acquaint girls with college-level programs, and meetings with educators and female college students to encourage girls to pursue degrees in science, mathematics, engineering, and technology (U.S. House, 2001).

Clearly, mentoring is just as critical for minorities and young women as it is for gifted scholars, perhaps even more so. The new bill in the U.S. House is consistent with recent research illustrating the positive impact that interventions and mentoring have on young women and minorities. Mentors give these students intellectual stimulation and communicate an excitement about the field of science. Their self-confidence and aspirations grow, and their feelings of isolation are lessened.

Implications for Project

The review of literature shows the potential of using intervention programs and mentoring to encourage gifted students to consider careers in engineering, science, math and technology. The use of computer technology is also important, which supports NASA's decision to use a Web-based course and a variety of computer tools and software. Variables that will be assessed in the program evaluation include characteristics noted in the recent literature on science and engineering intervention programs, such as hands-on activities and projects, interaction with professionals, pre-college academic courses, use of technology, workshop experience, and team competition.

The findings also underscore the impact of mentoring gifted scholars, women and minorities, and support the intuitive decision of JSC instructional designers to choose mentoring as the support system for sustaining scholars’ interest in science and engineering. TAS provides mentors at two levels of professional development: senior engineers already established in their careers, and college students who have chosen a course of study that will lead to a science or engineering career. The program evaluation will assess specific characteristics of mentoring, including adult and peer mentoring (engineers and co-ops), the use of technology (on-line mentoring), the workshop experience (face-to-face mentoring), academic tutoring (student project assessment by mentors) and long-term mentoring (continued guidance by mentors for one year). Assessment measures of all scholars, and of female and minority scholars, will be dependent variables in the program evaluation study.

Distance Education

A variety of distance education venues exist for high school students, including both virtual schools and educational Web-sites. This section begins with a discussion of the use of distance education with high school students and the benefits of using the Internet over other forms of distance education. It continues with an overview of virtual schools and educational Web-sites, and concludes with reviews of Web-based science programs and Web-based mentoring programs. Characteristics of model programs will be considered for program evaluation and continuous program improvement.

Distance education for gifted students

Research has shown that gifted adolescents have a high degree of self-motivation and the ability to work independently (Casey & Shore, 2000). Gifted students can take advantage of independent distance education programs because they need less hand-holding to accomplish the reading and research projects they must complete on their own.

Distance education removes the impediments created by a student’s geographic location. Independently motivated students can participate in distance learning and mentoring experiences (with access to data, educational activities, knowledge and resources) without being near the center of learning. Scholars do not have to live in large cities to have learning experiences that would otherwise be available only in large population centers. Selected outstanding scholars from across large regions can easily work together. Distance education also breaks down many of the stereotypes based on race, social status and gender that can arise in face-to-face classrooms. Distance education can provide a common experience for students with similar characteristics and needs.

Distance education is well suited to the characteristics of gifted scholars, who are generally self-motivated, independent, curious and hard-working, and who have experience using technology. While other students may be interested in pursuing a distance program, lack of access, time, or ability may hinder their persistence. Only the most motivated will stay with the course, as distance learning is often more difficult than regular course work. Regular students may see distance courses as too time-consuming, too difficult, or too much in competition with their other activities (Casey & Shore, 2000).

Web-based distance education offers several advantages. Students can learn at almost any time, from almost anywhere, about almost any topic. Students use Web resources to create real-life projects tailored to their learning styles. Student-to-student peer work-groups give students a measure of interactivity with one another. Most virtual schools have formal course descriptions and assessments, so students know what is expected of them. Some give students access to their own online portfolios. Most virtual schools have age and demographic diversity. Disabled students can participate from their homes. Few racial or cultural barriers are visible on-line. Students in rural areas have access to the same wide range of courses available to urban or suburban students (Tuttle, 1998).

There are limitations to the on-line distance education environment. Families may not be able to afford the cost of the hardware, software and services needed to complete the on-line activities (computer, modem, Internet access and tuition). However, many students who do not have a computer at home can still participate in distance programs through local school or library facilities if their interest warrants the extra effort. Some virtual schools require video-conferencing equipment and CD-ROM players in addition to other hardware, which may be another limiting factor. It seems clear that, at this time, distance learning on the Web is not the answer for all students (Tuttle, 1998); however, it may be the answer for students who are self-motivated and have initial expertise in the use of technology.

Web-based education programs

Virtual schools (or cyberschools) are becoming more common in the United States, as large numbers of students take advantage of the Web as an alternative method of learning. This section describes seven virtual schools, most of them for high school students.

In Eugene, Oregon, the Lane County School District Cyberschool offers courses that last from two and a half weeks to two semesters. Instructors e-mail students a course syllabus, URLs for Web-sites and a book list. A class listserv is used to exchange messages with classmates and the teacher. Additional book lists, Web-sites, real audio lectures and experts’ e-mail addresses are offered to students. Students earn full high school credit for these classes (Yahoo, 2001).

In Moab, Utah, the Electronic High School combines technology with classroom courses. The curriculum is text-based and includes Internet resources. Each course lasts one semester (a quarter of the year), and students complete exercises and take tests on-line, using e-mail to submit their work. Students receive full credit and can choose to take all their courses on-line. Each course costs $55 (Yahoo, 2001).

In Lake Grove, N.Y., the Babbage Net School has students meet in a chat room twice a week with their instructor. The instructor asks questions and students respond in real-time. A file cabinet on-screen contains worksheets, Web-sites, assignments, tests, sounds, images and any other material provided by the instructor. Assignments are submitted by e-mail. The tuition for a full year course is $1500 (Yahoo, 2001).

In British Columbia, Canada, the Nechako Electronic Busing Program is an individualized learning program that began as a support network for home-schooled students. Parents and on-line teachers create a learning plan for each student based on district learning outcomes. Families are provided with a computer, software, and access to e-mail and the Internet. The courses are free (Yahoo, 2001).

In Los Angeles, California, the Dennison On-line Internet Academy is a private school registered with the California State Board of Education. The teachers are university professors and the curriculum is for high school students. Students select research topics and work at their own pace. Students draw upon multimedia software, the Internet and other resources. Students report daily to their instructors via e-mail and chat sessions. Tuition is $3600 a year (Yahoo, 2001).

The Willoway Cyberschool is a private video-conferencing virtual school for grades 5-8 that offers on-line courses in many disciplines to students across the country. Students spend 40 minutes daily in a virtual video-conference class, 40 minutes a day researching online and 20 minutes a day talking with peers. Teachers help students take charge of their own learning, solve problems and become technically literate. Projects include HyperStudio stacks, Web page designs, constructed models and written reports. Students access individual, password-protected assignments. The cost is $2,250 a year (Yahoo, 2001).

The Virtual High School (VHS), with participating schools in twelve U.S. states, Jordan and Germany, is funded by a grant from the U.S. Department of Education. VHS delivers content through the Internet using teacher-designed labs and multimedia courses. Students receive guidance from teachers and are evaluated through e-mail, group discussion, on-line evaluation and projects. A wide variety of courses are offered: when VHS began in 1998, it offered 29 courses for grades 9-12, and by the second year it offered 40. Students generate questions, design and create projects, work in teams and work at a fast pace. Several VHS courses combine motion video with music and sound (Hammonds, 1998).

Common characteristics of these virtual schools include electronic mail, on-line chats, bulletin boards and listservs, Internet resources, archived resources, and anytime, anywhere access. Students generally need a personal computer, a CD-ROM drive and Internet access without firewalls. The characteristics of model virtual schools will be studied during the evaluation of the TAS program. A review of Web-based educational programs gives further insight into key factors to consider for program evaluation and continuous improvement.

Web-based science education programs

This section begins with an overview of ten Web-based science education programs, several of which are sponsored by NASA. This section concludes with a comparison between the ten programs and a recent research study that reviewed over 400 science, math and technology education Web-sites.

Imagination Place! in KAHooTZ is an interactive on-line club for children interested in the world of invention and design. Created by the Center for Children and Technology (CCT), Imagination Place is an electronic setting where students investigate technology and invention in their everyday world through activities on and off the computer, analyze problems in their daily lives, and stretch their imaginations to come up with inventive solutions. Students use technology to create their innovations and chat with club members around the globe about their designs (CCT, 2001). "You can easily use it as an assessment tool, to see the processes that they've gone through in putting it together, to understand what they've been thinking and also to assess their level of understanding of the topic," says one teacher from Melbourne (CCT Testimonials, 2001, para. 4).

NASA’s Classroom of the Future: Exploring the Environment poses situations students must solve collaboratively using Internet and technology tools and skills. NASA’s Classroom of the Future: Earth Science Explorer poses real-world questions to students, who must work collaboratively using research tools and problem-solving techniques (Classroom of the Future, 2001). At the Center for Educational Technology's International Space Station Challenge, students find activities and real-world challenges that allow them to investigate and solve open-ended problems (Center for Educational Technology, 2001). Cooperative learning and collaboration with experts are highlights of many of NASA's on-line science education sites.

Amazing Space is a set of Web-based activities, designed primarily for K-12 students and teachers, developed by the Space Telescope Science Institute. The lessons are interactive and include photographs taken by the Hubble Space Telescope, high-quality graphics, videos, and animation designed to enhance student understanding and interest. Activities include comet building, investigating black holes, playing with the building blocks of galaxies, solar system trading cards, training to be a scientist by enrolling in the Hubble Deep Field Academy, and creating a schedule for the Second Servicing Mission to upgrade the Hubble Space Telescope (Amazing Space, 2001).

The NASA Quest Project is a resource for teachers and students who are interested in meeting and learning about NASA people and the national space program. NASA Quest includes on-line profiles of NASA experts, live interactions with NASA experts each month, and audio and video programs delivered over the Web. Quest also offers lesson plans and student activities, collaborative activities in which kids work with one another, a place where teachers can meet one another, and an e-mail service in which individual questions get answered. Frequent live, interactive events allow participants to come and go as dictated by their own individual and classroom needs. These projects are open to anyone, without cost (NASA Quest, 2001).

The Observatorium, from the Learning Technologies Project, is a science resource site that includes Java-enabled simulations and Shockwave tutorials for students to explore in or out of the classroom (Learning Technologies Project, 2001). Links to a variety of on-line primers and tutorials for students can be found at the Lunar and Planetary Institute, along with a series of hands-on activities in planetary geology and an Earth/Mars comparison project for students and educators (Lunar and Planetary Institute, 2001).

Specific Web-based student involvement programs engage students in actual scientific research: collecting real data, using actual research facilities, and working on scientific projects. The GLOBE Program brings together students, teachers, and scientists from around the world to work together and learn more about the environment. Teachers participating in GLOBE guide their students through daily, weekly, and seasonal environmental observations, such as air temperature and precipitation. Using the Internet, students send their data to the GLOBE Student Data Archive. Scientists and other students use these data for their research. Teachers integrate computers and the Web into their classrooms and get students involved in hands-on science (GLOBE, 2001).

Explore Science showcases interactive on-line activities for students and teachers. Explore Science uses the Shockwave plug-in to create real-time correlations between equations and graphs, helping students visualize and experiment with major concepts from algebra through pre-calculus. A large number of multimedia experiments include virtual two- and three-dimensional simulations, such as the Air Track activity, which models a basic air track with two blocks. In the simulation, students can change the coefficient of restitution, initial masses, and velocities. Topics such as electricity, magnetism, gravity, density, light, color, sound, the Doppler effect, resonance, aerodynamics, interference patterns, heat, inertia, orbital mechanics, genetics, torque, time and vector addition are all simulated for students using interactive on-line computer tools (Explore Science, 2001).

Common characteristics of the ten science education Web-sites reviewed here include interactivity, multimedia, simulations, data collection, teamwork (over distance and with scientists), primers, tutorials, activities, on-line discussion sessions and e-mail with scientists, and real-world problem solving. Although these features were common to these programs, they are not representative of Web-based educational materials in general.

The comparative study by Miodoser, Nachmias, Lahav, and Oren (2000) reviewed 436 educational Web-sites focusing on mathematics, science and technology. The study illustrates that most Web-based math, science and technology programs do not utilize the potential of telecommunications technologies to facilitate online collaboration and interaction.

According to Miodoser, Nachmias, Lahav, and Oren (2000), most educational Web-sites are still predominantly text-based and do not yet show evidence of current pedagogical approaches, including the use of inquiry-based activities, application of constructivist learning principles, and the use of alternative evaluation methods.

The originators, goals, target populations, pedagogical beliefs, and technological features of the sites varied widely. The study examined 100 variables in four dimensions: descriptive information, pedagogical considerations, knowledge attributes, and communication features. A team of science educators identified and evaluated the sites in 1998. Academic institutions and museums each accounted for about one third of the sites' originators, and public and private organizations accounted for the remaining third (Miodoser, Nachmias, Lahav, & Oren, 2000).

Most sites were aimed at junior high and high school students; 62% were aimed at upper elementary, 22% at elementary, and 16% at higher education. The small number of sites that supported collaborative learning were all targeted at the high school level. The largest number of inquiry-based sites also targeted high school students. The museum environments supported the most collaborative, inquiry-based and problem-solving activities, with contextual help and links.

While 93% of the educational sites supported individual work, only 3% supported collaborative work. Only 38% of the sites supported inquiry-based learning. Informational (65%) and structured activities (48%) were the most frequent instructional methods. A small percentage (7-13%) included open-ended activities, Web-based tools and virtual environments (Miodoser, Nachmias, Lahav, & Oren, 2000).

In all, 76% of the sites supported browsing, and 33% included question-and-answer tasks. Only 3-7% included more complex interactions or the use of on-line tools. Interaction with people (mainly asynchronous) was found in only 13% of the Web-sites, and feedback was present in only a small number of sites (Miodoser, Nachmias, Lahav, & Oren, 2000).

Information retrieval (52%) and memorization (42%) were the most common educational approaches among the sites. Information analysis and inferencing were found in about one third of the sites. Only a few sites supported problem solving, creation, or invention. Of the sites studied, 83% relied on resources within the site, and only 31% provided links to other Web resources. Very few sites referred learners to experts and peers. Evaluation methods (standard or alternative) were rare (Miodoser, Nachmias, Lahav, & Oren, 2000).

Current pedagogical approaches support learning that requires student involvement in the construction of knowledge, interaction with experts and with peers, adaptation of instruction to individual needs, and new ways of assessing students’ learning (Dick, 1996; Gagne, Briggs & Wagner, 1992). The expectation that science and math educational Web-sites would address constructivist principles was not borne out. Only 28.2% of the sites included inquiry-based activities, and most were highly structured, with control given to the computer rather than the learner. Only 2.8% supported any kind of collaborative learning. Few sites offered complex or online activities (3-6%), and few offered any form of feedback, human or automatic (5-16%). Only a few offered links to online tools (12.8%), external resources (31%) or experts (8.7%) (Miodoser, Nachmias, Lahav, & Oren, 2000).

The potential of the Internet to support communication among learners and with instructors or experts was not realized in the sites examined in this study. Most of the communication offered was by e-mail (65%), while chat and other telecommunication between peers was almost non-existent. Distance work was supported in less than 2% of the sites. Only 4% included methods for synchronous communication, which is surprising given the popularity of chat and gaming among school-age users of the Web. Features aimed at supporting learning communities were not found in any site. Interactivity (based on Java applets or Shockwave) was rare, and most interactivity resembled classic CAI transactions (e.g., multiple-choice questions, assembling configurations) (Miodoser, Nachmias, Lahav, & Oren, 2000).

The results of this study show that while many institutions are taking advantage of the Web to increase access to science and math education, few apply constructivist learning principles or promote interactivity. However, interactive simulations, interactions with experts and peers, and creative real-world problem solving are common in the NASA and NASA-related sites located in the review of literature. Interaction with experts and peers is also an essential feature of mentoring programs.

Web-based mentoring programs

Mentoring is a key aspect of the TAS Program. This section provides an overview of Web-based mentoring programs for high school students, including one developed specifically for students interested in science, engineering and computing.

The Telementoring Young Women in Science, Engineering and Computing program used the Internet to give high school students pursuing scientific and technical fields electronic access to role models. On-line mentors offered career guidance and emotional support. Sponsored by the National Science Foundation, Telementoring was a three-year project (1995 to 1998) that drew on the strengths of telecommunications technology to build on-line communities of support among female high school students, professional women in technical fields, parents, and teachers.

Using telecommunications, the project's goal was to provide the validation, advice, and support critical for young women making decisions about pursuing courses and careers in science, engineering, computing, and related fields. Other goals included enabling young women to work through their concerns and fears when considering further studies in technical and scientific fields, and addressing the isolation young women experience in engineering, computing, and science classrooms.

Telementoring developed a telecommunications environment that enabled high school girls enrolled in technical courses in six states to communicate on an ongoing basis with successful women professionals and college students. This environment was designed to provide girls with the validation and advice not available in traditional educational settings. The project also developed informational parent forums and an archive of equity materials to assist parents and teachers in supporting girls' pursuits in engineering and computing.

Students and mentors reported communicating online at least once a week through e-mail and discussion groups. The predominant topics were college and future careers. Students predominantly had positive feelings about their mentors and felt supported by them. Over 90% of the mentors stated they would participate again, and felt they had some impact on broadening their students' horizons regarding career choices. In all, 28% of students felt that communicating with a mentor had positively altered their perceptions about women in science. Some girls had thought that their mentors would be serious, unsociable, older and not 'cool', and most were pleasantly surprised (Bennett, 1997). Of the students surveyed, 68% said they would like to have lifestyles like their mentors'. Students indicated they were more likely to apply for an internship, join a science club and join a study group. Mentors had a particularly strong influence on students' views of science and technology when students discovered that their personal hobbies (such as reading and music) were related to their career interests (Bennett, 1997).

The Electronic Emissary program was prototyped in the fall of 1992 and went online early in February 1993. It is one of the longest-running Internet-based telementoring and research efforts serving K-12 students and teachers around the world. Each team consists of a K-12 educator (teacher or parent), one or a group of K-12 students, one or more volunteer subject matter experts selected by the participating educator and student(s), and an online facilitator who works with the Electronic Emissary Project at the University of Texas at Austin.

E-mail between students and their mentors is facilitated by graduate students in education at the University of Texas at Austin and other experienced educators with an interest in online learning. One mentor notes, "I found that my role would range from being a ‘listener’ to ‘technician’ to ‘prompter,’ and, once, even as referee" (Figg, 1997). In on-line evaluations, team members are asked to describe their project's purpose, audience, and structure, what aspects of the project seemed to work well, and what they would do differently if they were to do the project again.

The International Telementor Program is an international project-based mentoring opportunity for students around the world. Since 1995, more than 16,000 students, mentors and teachers from around the world have participated in the program. Primary corporate sponsors include Hewlett-Packard and Sun Microsystems. The program's mission is to create project-based online mentoring support in math, science, engineering, communication and career education planning for students and teachers in classrooms and home-school environments, with a focus on serving at-risk student populations. The International Telementor Program serves 5th-12th grade students, as well as college and university students, in targeted worldwide communities through electronic mentoring relationships with business and academic professionals. Mentors have helped students with a wide range of projects, including studying whales in the North Atlantic and peregrine falcons, developing Web-sites, programming on-line quizzes, and assembling a statistical analysis of the performance of a professional baseball team.

Characteristics of these on-line mentoring programs include matching mentors with students based on gender and interest, career guidance and emotional support, parent forums and materials, and projects involving mentors, educators and students. These characteristics of on-line mentoring programs will be considered as variables under study in the program evaluation.

Implications for program evaluation and continuous improvement

Findings from the review of literature support the intuitive decision of NASA instructional designers to choose distance education in order to reach the greatest number of students. Using the Web to facilitate mentoring and sustain scholars' interest in science and engineering seems appropriate for self-motivated students who seek out and benefit from adult relationships.

The review of literature supports science education Web-sites that use multimedia, are highly interactive, allow access to experts in the field, and provide inquiry-based activities. Variables that will be assessed in the program evaluation include characteristics noted in the recent literature on Web-based distance education programs, such as the use of on-line discussion sessions and e-mail, bulletin boards, listservs, Internet resources, and archived resources.

Characteristics specific to Web-based science education programs that will be considered during the program evaluation include the use of interactivity, multimedia, simulations, data collection, teamwork (over distance and with scientists), primers, tutorials, activities, and real-world problem solving, all grounded in constructivist learning principles that promote interactivity.

The review of literature regarding on-line mentoring programs illustrates the importance of matching on-line mentors with students based on gender and interest, offering both career guidance and emotional support, and involving students and mentors in activities and projects that encourage them to work together. These characteristics do not differ from those of traditional mentoring programs except in the delivery method, which in this case is the Internet, and they reflect the same basic mentoring variables to be considered in the program evaluation.

Program Evaluation

This section reviews literature on program evaluation to answer four key questions: (1) What is program evaluation? (2) What forms, approaches and types of evaluation are used for instructional programs? (3) What are the procedures for conducting a program evaluation of education programs, distance education and mentoring programs? and (4) How can issues of validity and reliability be addressed in program evaluation?

What is program evaluation?

The idea of formal evaluation dates back to about 2000 B.C., when the Chinese began a functional evaluation system for their civil servants. Since then, many definitions of evaluation have been developed. In 1994 the Joint Committee on Standards for Educational Evaluation described evaluation as the systematic investigation of the worth or merit of an object (Western Michigan University, 2001). According to The User-Friendly Handbook for Evaluation of NASA’s Educational Programs (2001, p. 5), "This definition centers on the goal of using evaluation for a purpose. Evaluations should be conducted for action-related reasons and the information provided should facilitate deciding a course of action."

Research aims at producing new knowledge, but is not necessarily applied to a specific decision-making process. In contrast, program evaluation is deliberately undertaken to guide decision making (Wolf, 1979). Research arises from scholarly interest or academic requirement, and is an investigation undertaken with a definite problem in mind. Experimental research produces knowledge that is generalizable; this is the critical nature of research. If the researcher’s results cannot be duplicated, they are usually dismissed. Little or no interest may attach to knowledge that is specific to a particular sample of individuals from a single location, studied at a particular point in time (Wolf, 1979). Program evaluation, by contrast, seeks to produce knowledge that is specific to a particular setting. Evaluators concern themselves with evaluating a particular program in a particular location, and the resulting evaluative information is of high local relevance for the teachers and administrators in that setting. The results may have no scientific relevance for any other location. Scholarly journals generally do not publish the results of evaluation studies, since these studies rarely produce knowledge that is sufficiently general in nature to warrant widespread dissemination (Wolf, 1979).

The major attributes studied during program evaluation represent educational values, goals or objectives that we seek to develop in learners as a result of exposing them to a set of educational experiences. Learner achievements, attitudes, and self-esteem are all educational values. Traditionally, measurement in education is undertaken for the purpose of making comparisons between individuals with regard to some characteristic. In evaluation, it is not necessary or even desirable to make such comparisons; what is of interest is the effectiveness of a program (Wolf, 1979). Consequently, there is no requirement that all learners be presented with the same tests, questionnaires or surveys. Information from different sets of questions can be combined and summarized to describe the performance of an entire group. Evaluation and measurement therefore lean toward different ends: evaluation toward describing the effects of a program, and measurement toward describing and comparing individual performance. Evaluation is directed toward judging the worth of a total program, and can also be used to judge the effectiveness of a program for a particular group of learners.

NASA managers are encouraged to conduct program evaluations to communicate information about a program to stakeholders, to help improve a program, and to provide new insights about a program. When evaluating a program, information should be gathered on whether the program’s goals are being met and how the various aspects of the program are working, with the aim of supporting a continuous improvement process. Sanders (1992, p. 3) echoes this idea: "Successful program development cannot occur without evaluation." Sanders cites benefits to both students and educators, including the improvement of educational practices, the elimination of curricular weaknesses, the recognition and support of educators, and the prioritization of areas of need in school improvement.